First, it was competency frameworks. Then it was skill taxonomies. Both suffer from the same challenges: they’re hard to build and even harder to keep up to date.

Can AI help? Let’s find out.

Why do organizations need taxonomies?

For most companies, employees are the biggest expense (and asset). But how companies invest in those employees can quickly become disjointed. If the job description, interviewing process, onboarding, performance management, and career pathing aren’t in sync, you create a mess. It reminds me of coaching my daughter’s soccer team: I yell for kids to stay in their positions and the parents yell “Go get the ball!” The result is chaos and confusion.

Taxonomies create a shared understanding of what’s important. Companies use taxonomies to organize resources (discovery), connect people to opportunities (matching), and align activity to insights (reporting). Taxonomies can align each part of the talent lifecycle. As HR expert Dave Ulrich says: “Every discipline, to become a sustainable science, uses systematics (classification of separate items into common groups) to create taxonomies (types of things that go together) to make progress.”

Taxonomies make the ambiguous actionable. Has a business leader told employees to “improve your business acumen” and left them wondering what that even means? Are there roles your people are interested in but they don’t know the steps needed to get those roles? Taxonomies can help. Taxonomies break complex ideas or challenges into smaller, more actionable steps.

Experiment No. 1: Skills Taxonomy for a Generic Role

First, let’s create a skill taxonomy for a fairly generic role using AI. This taxonomy could serve as the backbone for writing job descriptions, guiding interview questions, building training programs, and helping form the criteria for performance management conversations. We’ll do this one as a video so you can see the process.

The taxonomy came together fast and the results look reasonable. I could easily add or remove layers of detail (even getting into tasks within a skill), so I could drill down as far as I needed.

This would be a great place to start a taxonomy effort.
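To make the idea of layers concrete, here is a minimal sketch of a taxonomy as a tree that can be printed at different depths. The `Node` class, the `outline` function, and the sample role are my own illustrative assumptions, not the output or internals of the tool in the video.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One entry in the taxonomy: a role, a skill, or a task within a skill."""
    name: str
    children: list["Node"] = field(default_factory=list)


def outline(node: Node, max_depth: int, depth: int = 0) -> list[str]:
    """Return indented lines for the tree, stopping max_depth levels below the root."""
    lines = ["  " * depth + node.name]
    if depth < max_depth:
        for child in node.children:
            lines.extend(outline(child, max_depth, depth + 1))
    return lines


# Hypothetical example data, not the taxonomy generated in the experiment.
role = Node("Project Manager", [
    Node("Planning", [Node("Build a project schedule"), Node("Estimate costs")]),
    Node("Communication", [Node("Run status meetings")]),
])

print("\n".join(outline(role, max_depth=1)))  # role and skills only
print("\n".join(outline(role, max_depth=2)))  # adds tasks within each skill
```

Changing `max_depth` is the code equivalent of adding or removing a layer of detail.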

The biggest downside is that the AI makes its own judgments (within the parameters of our prompt) and comes up with its own skills. It doesn’t use a predefined list of skills, which limits the taxonomy’s ability to create a common language across tools and applications.

The real benefit will come when the dynamic capabilities of the AI are combined with a company’s internal skills language. This will require some extra implementation, but it is definitely doable and something to watch for from the Degreed product team in the future.
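One simple way to picture that combination is to map each AI-suggested skill name onto the closest entry in an internal list. The sketch below uses fuzzy string matching from Python’s standard library. The `INTERNAL_SKILLS` list and the `map_to_internal` helper are hypothetical, and this is not a description of how Degreed does or will do it.

```python
from difflib import get_close_matches
from typing import Optional

# Hypothetical internal skills list; a real one would come from your own systems.
INTERNAL_SKILLS = ["Project Management", "Data Analysis", "Stakeholder Communication"]


def map_to_internal(suggested: str, cutoff: float = 0.6) -> Optional[str]:
    """Return the closest internal skill name, or None if nothing is similar enough."""
    lookup = {name.lower(): name for name in INTERNAL_SKILLS}
    matches = get_close_matches(suggested.lower(), list(lookup), n=1, cutoff=cutoff)
    return lookup[matches[0]] if matches else None


for suggestion in ["project management", "Data Analytics"]:
    print(suggestion, "->", map_to_internal(suggestion))
```

String similarity only catches near-identical wording. Matching on meaning (for example, with embeddings) would handle synonyms better, at the cost of more setup.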

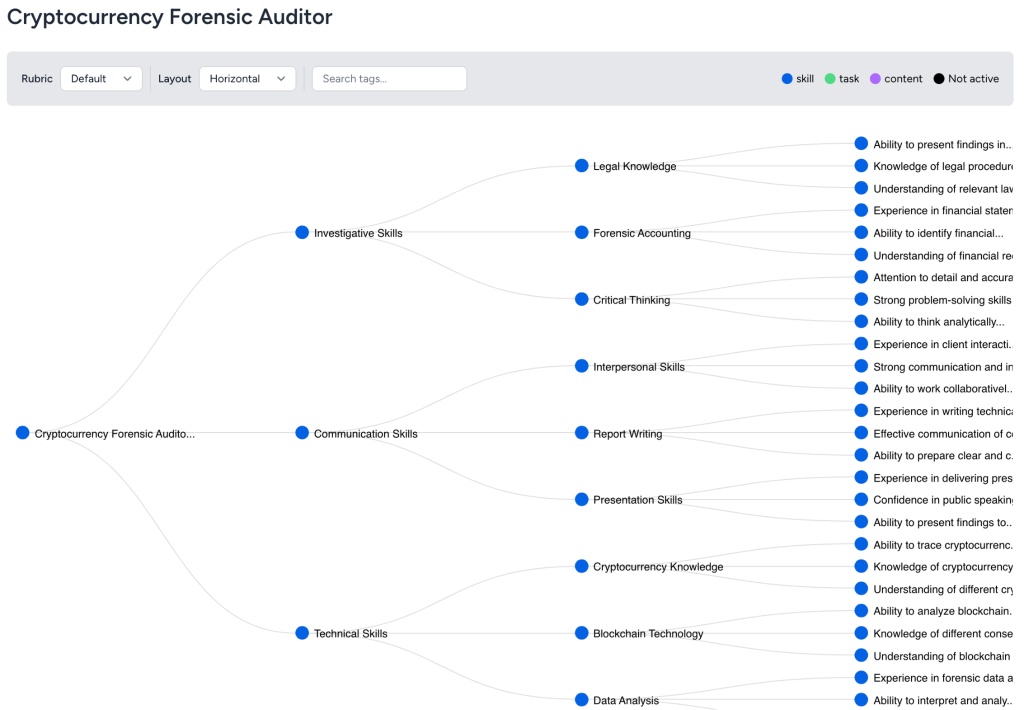

Experiment No. 2: Skills Taxonomy for a Hyper-specific Role

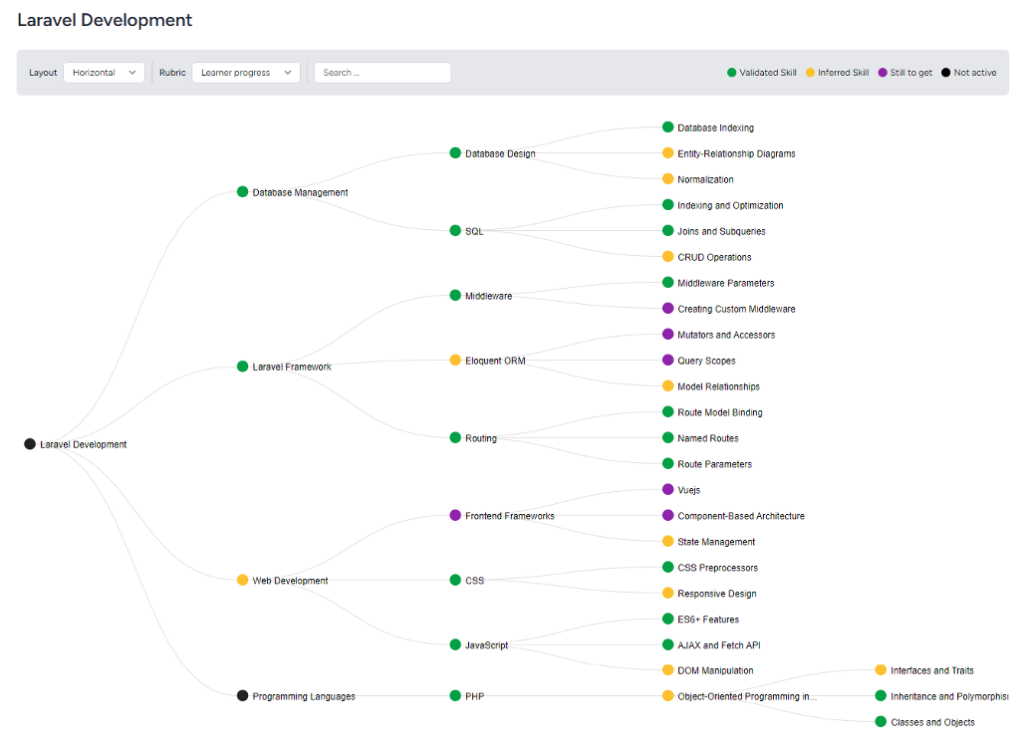

Every company has its own unique set of job titles. Let’s see how well the AI does when we select a very specific role. Using the same process as in our previous experiment, here’s the result:

The more detailed skills are cut off in the screenshot, but even without any edits, the results look impressive. This could be a great way to build out skill taxonomies, especially for roles that wouldn’t typically have good skill suggestions from your HR and L&D tools.

Experiment No. 3: Highlighting Skills That Are Out of Date

Keeping taxonomies up to date is a manual, time-consuming task. Let’s see if AI can do the heavy lifting and identify which skills in a taxonomy may be out of date (e.g., a skill that names an old technology).

To do this we aren’t creating a new taxonomy. Instead, we’re evaluating our existing taxonomy with customizable criteria. First I’ll create a rubric with values for “Current” and “Out of Date” and then ask the AI to classify each skill according to the rubric.

Here’s a video to show the steps:

You can see that one skill, React 16, was flagged as being out of date. React is a JavaScript library that was on version 18 at the time of writing, so React 16 was rightly flagged. Having a system like this would make it easy to identify when and where you need to make updates.
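For readers who want to see the shape of a rubric-based check, here is a minimal sketch. The rubric wording, the `build_prompt` and `classify` helpers, and the `fake_llm` stand-in are all assumptions for illustration. A real version would pass in a function that calls your LLM of choice.

```python
from typing import Callable

# Rubric labels from the experiment; the definitions are my own assumed wording.
RUBRIC = {
    "Current": "The skill refers to tools or practices still in common use.",
    "Out of Date": "The skill names a superseded version or retired technology.",
}


def build_prompt(skill: str) -> str:
    """Ask for exactly one rubric label so the reply is easy to validate."""
    options = "\n".join(f"- {label}: {meaning}" for label, meaning in RUBRIC.items())
    return (
        f"Classify the skill '{skill}' using this rubric:\n{options}\n"
        "Reply with the label only."
    )


def classify(skills: list[str], ask_llm: Callable[[str], str]) -> dict[str, str]:
    """Label each skill; any reply outside the rubric is marked for human review."""
    results = {}
    for skill in skills:
        reply = ask_llm(build_prompt(skill)).strip()
        results[skill] = reply if reply in RUBRIC else "Needs Review"
    return results


# Stand-in for a real model call, used only so the sketch runs offline.
def fake_llm(prompt: str) -> str:
    return "Out of Date" if "React 16" in prompt else "Current"


print(classify(["React 16", "TypeScript"], fake_llm))
```

Restricting replies to the rubric labels, with a "Needs Review" fallback, keeps an unexpected answer from slipping into the taxonomy unnoticed.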

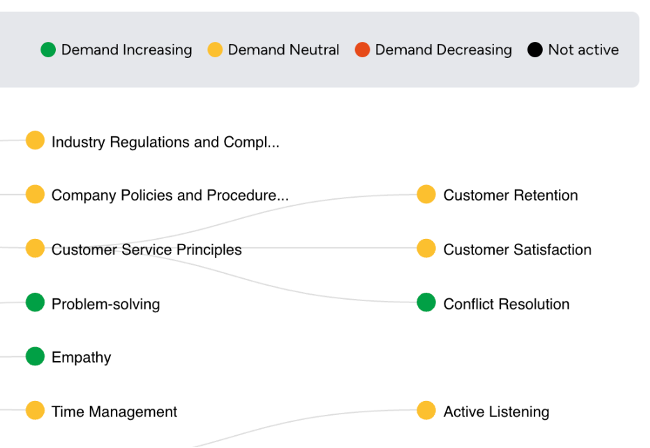

Experiment No. 4: Highlighting Increasing or Decreasing Demand for Skills

To have our taxonomies guide talent decisions, it would be helpful if we knew which skills were becoming increasingly important. Let’s create another custom rubric and see if AI can help us identify which skills are increasing, or decreasing, in demand.

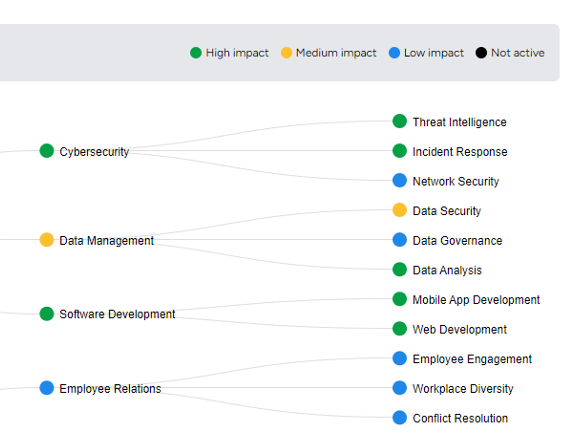

Here are the results:

One thing to remember in this type of analysis is that the training data for LLMs can be more than a year old (e.g., April 2023 for GPT-4 Turbo). Techniques such as retrieval-augmented generation can supply LLMs with up-to-date information from search engines, which would be especially useful when trying to make future projections.

This analysis could also be made more powerful if combined with some of your own internal data from your hiring or learning systems.
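As a rough illustration of what internal data could add, the sketch below labels each skill by how often it appears in job requisitions across two periods. The counts, the 10% threshold, and the `demand_trend` function are hypothetical. Treat it as one possible signal to sit alongside the AI’s judgment, not a replacement for it.

```python
def demand_trend(earlier: int, later: int, threshold: float = 0.10) -> str:
    """Label a skill by the relative change in mentions between two periods."""
    if earlier == 0:
        return "Increasing" if later > 0 else "Stable"
    change = (later - earlier) / earlier
    if change > threshold:
        return "Increasing"
    if change < -threshold:
        return "Decreasing"
    return "Stable"


# Hypothetical counts of job requisitions mentioning each skill: (last year, this year).
requisitions = {"Prompt Engineering": (4, 19), "jQuery": (12, 5), "SQL": (40, 42)}
trends = {skill: demand_trend(*counts) for skill, counts in requisitions.items()}
print(trends)
```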

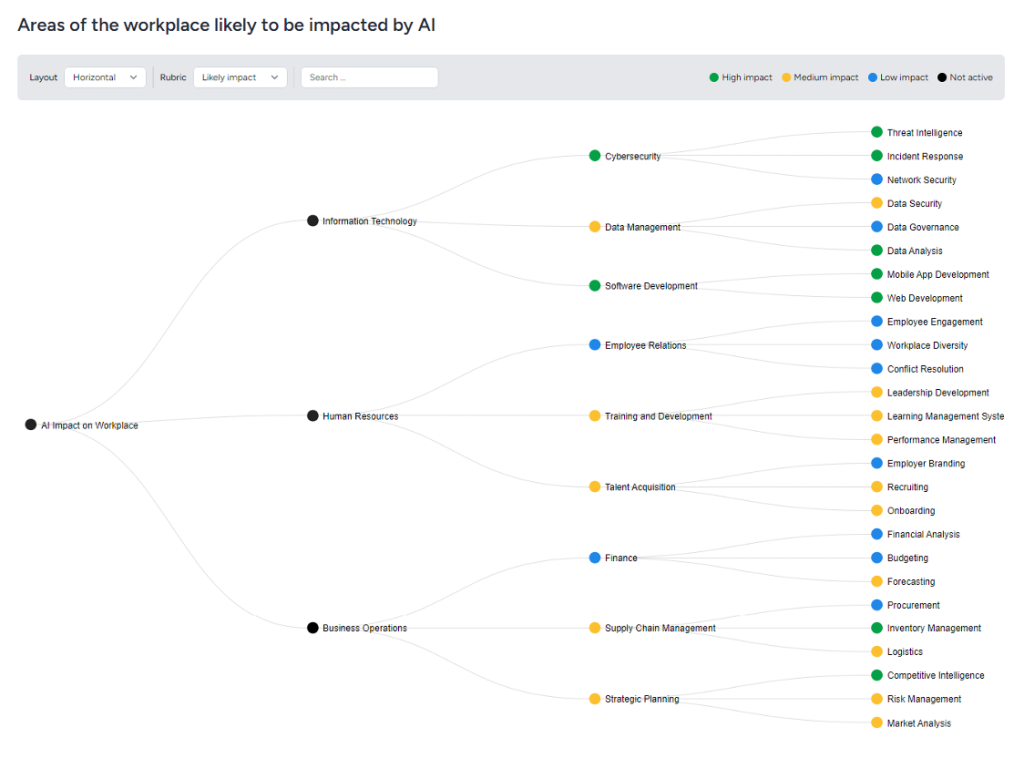

Experiment No. 5: Highlighting the Impact of AI on Skills

Upskilling takes time, which means it’s helpful to have projections on how skills will change. In this case, we want to see which skills or tasks are more or less likely to be impacted by AI. This would help us plan appropriate skilling initiatives.

After creating another custom rubric, here are the results:

Again, the results look fairly reasonable. For this type of in-depth analysis, we could likely improve the results with different prompting techniques, such as chain-of-thought reasoning (which has the LLM work through a problem one step at a time), multi-shot prompting (where you include examples of good results in your prompt), or multiple agents (where several LLMs work together on the analysis).
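Here is a minimal sketch of what a multi-shot prompt with a step-by-step instruction might look like when assembled in code. The example skills, labels, and the `build_multi_shot_prompt` function are hypothetical and are not the prompt used in this experiment.

```python
# Hypothetical worked examples; real ones should come from analyses you trust.
EXAMPLES = [
    ("Data entry", "High impact", "Repetitive and rule-based, so easy to automate."),
    ("Conflict mediation", "Low impact", "Depends on trust and reading people."),
]


def build_multi_shot_prompt(skill: str) -> str:
    """Prepend worked examples, then ask for reasoning before the final label."""
    shots = "\n\n".join(
        f"Skill: {name}\nReasoning: {why}\nLabel: {label}"
        for name, label, why in EXAMPLES
    )
    return (
        "Rate how much AI is likely to change each skill.\n\n"
        f"{shots}\n\n"
        f"Skill: {skill}\nThink step by step, then give Reasoning and Label."
    )


print(build_multi_shot_prompt("Copywriting"))
```

Putting the reasoning before the label in each example nudges the model to explain itself first, which is the chain-of-thought part of the prompt.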

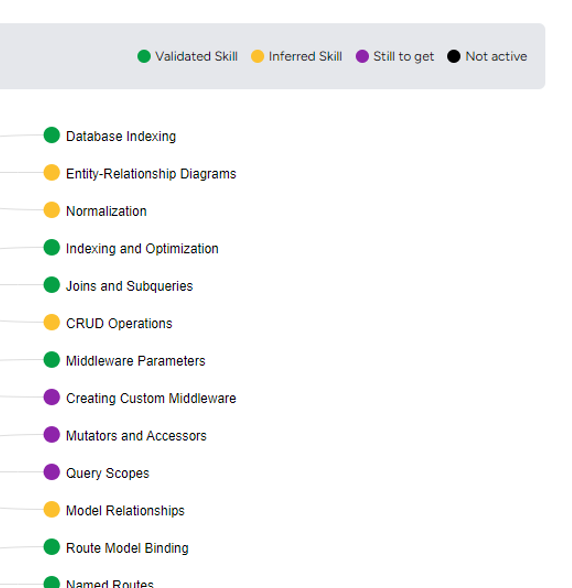

Experiment No. 6: Showing User Progress Against Skills

So far we’ve used taxonomies at the administrative level—for planning and organizing talent across the company. Let’s now explore the other benefit of taxonomies—their ability to deconstruct goals into more actionable items. This could be useful at the employee level as a way to guide learning and measure progress.

Here we’re visualizing a taxonomy that reflects an employee’s mastery level of each step.

I love this example because it highlights the power of combining AI capabilities with employee data to create something personalized that you would not get from a generic tool like ChatGPT.
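To show how employee data could roll up through a taxonomy, here is a small sketch in which each parent’s progress is the average of its children. The 0-to-1 mastery scale, the sample goal, and the `progress` function are assumptions for illustration, not how the visualization above is calculated.

```python
def progress(node: dict) -> float:
    """Return mastery from 0.0 to 1.0: leaves carry a score, parents average their children."""
    children = node.get("children", [])
    if not children:
        return node["mastery"]
    return sum(progress(child) for child in children) / len(children)


# Hypothetical employee data; real levels would come from your learning system.
goal = {
    "name": "Business Acumen",
    "children": [
        {"name": "Financial Literacy", "children": [
            {"name": "Read a P&L statement", "mastery": 1.0},
            {"name": "Build a budget", "mastery": 0.5},
        ]},
        {"name": "Market Awareness", "mastery": 0.25},
    ],
}

print(f"{goal['name']}: {progress(goal):.0%}")  # prints "Business Acumen: 50%"
```

A plain average treats every child as equally important. Weighting skills by how much they matter for the role would be a natural next step.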

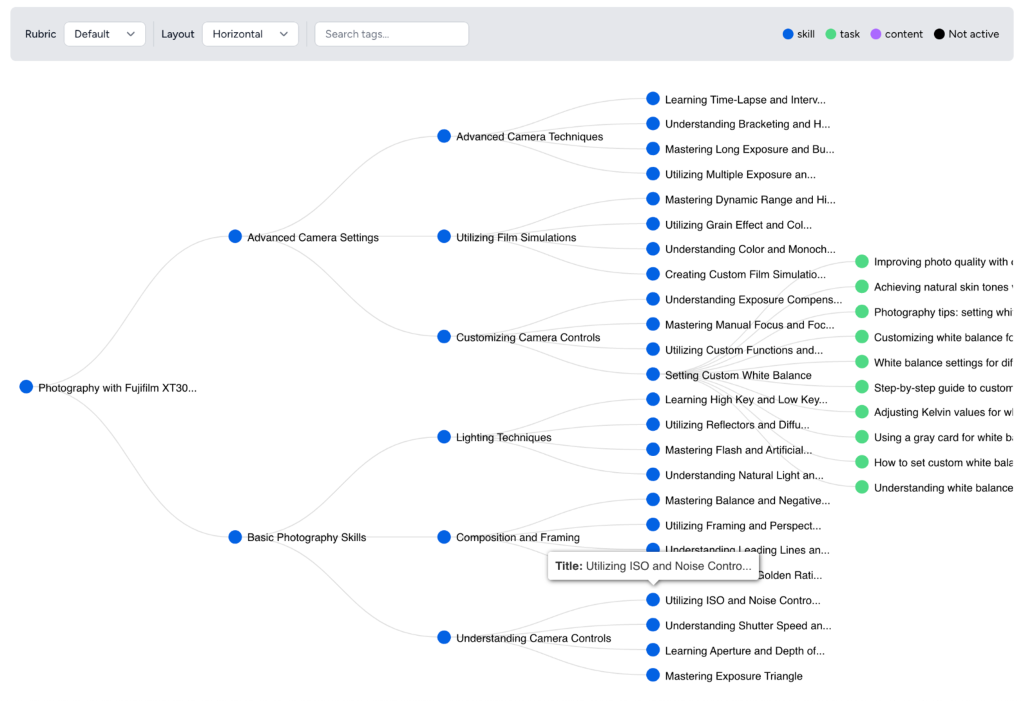

Experiment No. 7: Using Taxonomies to Guide Personal Learning

As we saw in the last experiment, taxonomies can be useful at the individual level. I’m going to use a personal use case for this next one. I’ve been trying to learn photography, but it’s hard to know where to start.

Here I’ve asked AI to create a personalized learning plan for me based on my specific camera:

This feels like a really nice outline. I would want to remove some things and go deeper in some other areas. But, now that I have this outline, I can imagine adding actions such as “Show me an example” or “Give me ideas on how to practice this.” I could even ask the system to analyze some of my photos and highlight the skills for me to improve. I could also use this outline to create an assessment that could then inform a baseline for each skill.

I think there’s a lot of potential here. This could really get us closer to personalized, dynamic skill-building.

What do you think?

Do you see potential upsides, or too many challenging downsides, to AI-generated taxonomies? If you have thoughts or feedback, or would like to build your own taxonomies, please email me at tblake@degreed.com. To keep up with updates, follow me on LinkedIn.

Ready to learn more about taxonomies?

The Degreed Professional Services team offers free consultations. Its core focus is acting as a partner to you, not offering transactional services. Let’s work together to explore your learning strategy, technology goals, and questions about taxonomies.

Book a private consultation with the Degreed Professional Services team.

Thank you for experimenting with us.

We’ll see you at the next one.

This is the second post in a regular series. You can also read the first post, Degreed Experiments with Emerging Technologies.